My main research focuses on improving mobile computing and enabling a new genre of immersive mobile applications by leveraging the power of cloud computing. Cloudlet is the core software system for achieving this goal; the following sections introduce the basic ideas behind the Cloudlet research.

Cloudlet

A cloudlet is a new architectural element that arises from the convergence of mobile computing and cloud computing. It represents the middle tier of a 3-tier hierarchy: mobile device --- cloudlet --- cloud. A cloudlet can be viewed as a "data center in a box" whose goal is to "bring the cloud closer". A cloudlet has four key attributes:

- only soft state: It does not have any hard state, but may contain cached state from the cloud. It may also buffer data originating from a mobile device (such as video or photographs) en route to safety in the cloud. The avoidance of hard state means that each cloudlet adds close to zero management burden after installation: it is entirely self-managing.

- powerful, well-connected and safe: It possesses sufficient compute power (e.g., CPU and RAM) to offload resource-intensive computations from one or more mobile devices. It has excellent connectivity to the cloud (typically a wired Internet connection) and is not limited by finite battery life (i.e., it is plugged into a power outlet). Its integrity as a computing platform is assumed; in a production-quality implementation this will have to be enforced through some combination of tamper-resistance, surveillance, and run-time attestation.

- close at hand: It is logically proximate to the associated mobile devices. "Logical proximity" is defined as low end-to-end latency and high bandwidth (e.g., one-hop Wi-Fi). Often, logical proximity implies physical proximity. However, because of "last mile" effects, the converse may not be true: physical proximity may not imply logical proximity.

- builds on standard cloud technology: It encapsulates offload code from mobile devices in virtual machines (VMs), and thus resembles classic cloud infrastructure such as Amazon EC2 and OpenStack. In addition, each cloudlet has functionality that is specific to its cloudlet role.
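The "only soft state" attribute can be made concrete with a toy model. The following is a minimal sketch under stated assumptions, not Elijah or OpenStack++ code: the class `SoftStateCloudlet` and its methods are hypothetical, and a plain dict named `cloud` stands in for durable storage in the distant cloud. Everything the cloudlet holds is either a cached copy of cloud data or an upload in transit to the cloud, so once buffered uploads are flushed, wiping the cloudlet loses nothing durable.

```python
from collections import OrderedDict, deque


class SoftStateCloudlet:
    """Toy cloudlet that holds only soft state (hypothetical class, not Elijah code).

    `cloud` is a dict standing in for durable storage in the distant cloud.
    """

    def __init__(self, cloud, cache_capacity=2):
        self.cloud = cloud
        self.cache_capacity = cache_capacity
        self.cache = OrderedDict()  # LRU copies of cloud (or pending) objects
        self.outbox = deque()       # device uploads en route to the cloud

    def _remember(self, key, value):
        """Insert into the LRU cache, evicting the least recently used entry."""
        self.cache[key] = value
        self.cache.move_to_end(key)
        if len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)

    def read(self, key):
        """Serve from the cache; on a miss, check pending uploads, then the cloud."""
        if key in self.cache:
            self.cache.move_to_end(key)
            return self.cache[key]
        pending = [v for k, v in self.outbox if k == key]
        value = pending[-1] if pending else self.cloud[key]
        self._remember(key, value)
        return value

    def upload(self, key, value):
        """Buffer data from a mobile device (e.g., a photo) for later delivery."""
        self.outbox.append((key, value))
        self._remember(key, value)

    def flush(self):
        """Deliver buffered uploads to the cloud; return how many were sent."""
        sent = 0
        while self.outbox:
            key, value = self.outbox.popleft()
            self.cloud[key] = value
            sent += 1
        return sent

    def reset(self):
        """Drop all local state, as if the cloudlet were wiped or replaced."""
        self.cache.clear()
        self.outbox.clear()


if __name__ == "__main__":
    cloud = {"map-tile": "tile-bytes"}
    cloudlet = SoftStateCloudlet(cloud)
    print(cloudlet.read("map-tile"))   # fetched from the cloud, then cached
    cloudlet.upload("photo-1", "jpeg-bytes")
    print(cloudlet.flush())            # 1 upload delivered
    cloudlet.reset()                   # nothing durable is lost
    print(cloud["photo-1"])
```

The design choice mirrors the management argument in the text: because `reset()` after `flush()` is always safe, a cloudlet built this way needs no backup or recovery procedure of its own.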

Effect of Cloud Location

To see the effect of cloud location, we compared an application's performance on a cloudlet and in the cloud. The YouTube videos show an Android front-end application with a compute-intensive back-end offloaded to either an Amazon EC2 cloud or a cloudlet. As a user moves the smartphone, its accelerometer senses the movement and sends the readings to a graphics engine in the back-end. This graphics engine uses a physics-based simulation of particle movements in a fluid (Reference: SOLENTHALER, B., AND PAJAROLA, R. "Predictive-corrective incompressible SPH". ACM Transactions on Graphics 28, 3 (2009)). The results of the simulation are periodically rendered on the smartphone, giving the illusion of liquid sloshing around. The end-to-end latency between the front-end (sensing and display) and the back-end (simulation) determines the quality of the graphics: as latency increases, the motion degrades and becomes jerky or sluggish. The output frame rate, in frames per second (FPS), is therefore a good metric of graphics quality.

Cloudlet vs Cloud - The impact of latency in mobile application

Each individual video is available at the following links:

- Cloudlet (48 FPS, 29 ms end-to-end latency)

- Amazon EC2 US-East (39 FPS, 41 ms)

- Amazon EC2 US-West (15 FPS, 120 ms)

- Amazon EC2 EU (11 FPS, 190 ms)

- Amazon EC2 Asia (5 FPS, 320 ms)
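The measurements above show why logical proximity matters when choosing where to offload. The following minimal sketch, assuming a simple selection policy that is not part of OpenStack++ or the cloudlet-discovery project, encodes the reported figures and picks the lowest-latency back-end that still meets a target frame rate. The `Backend` class, the `choose_backend` function, and the 30 FPS default threshold are hypothetical choices for illustration; only the latency and FPS values come from the list above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Backend:
    name: str
    latency_ms: float  # measured end-to-end latency
    fps: float         # measured output frame rate


# Figures reported above for the fluid-simulation demo.
MEASURED = [
    Backend("Cloudlet", 29, 48),
    Backend("Amazon EC2 US-East", 41, 39),
    Backend("Amazon EC2 US-West", 120, 15),
    Backend("Amazon EC2 EU", 190, 11),
    Backend("Amazon EC2 Asia", 320, 5),
]


def choose_backend(backends, min_fps=30.0) -> Optional[Backend]:
    """Return the lowest-latency back-end whose frame rate meets min_fps.

    min_fps is an assumed quality threshold, not a value from the experiment.
    Returns None when no back-end qualifies.
    """
    acceptable = [b for b in backends if b.fps >= min_fps]
    return min(acceptable, key=lambda b: b.latency_ms, default=None)


if __name__ == "__main__":
    for b in sorted(MEASURED, key=lambda b: b.latency_ms):
        print(f"{b.name:<20} {b.latency_ms:>4.0f} ms  {b.fps:>3.0f} FPS")
    best = choose_backend(MEASURED)
    print("chosen:", best.name if best else "none")
```

With the assumed 30 FPS target, only the cloudlet and EC2 US-East qualify, and the cloudlet wins on latency; the sorted printout also makes the steady drop in frame rate as latency grows easy to see.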

"Bringing the cloud closer" also improves the survivability of mobile computing in hostile environments, such as military applications and disaster recovery. A critical but easily disrupted dependence on a distant cloud is replaced by dependence on a nearby cloudlet plus best-effort synchronization with the distant cloud. The paper "The Role of Cloudlets in Hostile Environments" explores these issues.

Cognitive Assistance

Cloudlets are the enabling technology for a new genre of resource-intensive but latency-sensitive mobile applications that will emerge in the future. These include cognitive assistance applications that will seamlessly enhance a user's ability to interact with the real world around him or her (see the Gabriel paper for more details). Here is an early thought piece on augmenting cognition, and the following demo shows the very first wearable cognitive assistance application that we have built.

Lego Assistant

Open Source

Elijah-related open source software comes in two parts. The first part is a set of cloudlet-specific extensions to OpenStack (http://openstack.org). By applying these extensions, OpenStack becomes "OpenStack++". These extensions are released under the same open source license as OpenStack itself (Apache v2). The second part is a set of new mobile computing applications that build upon OpenStack++ and leverage its support for cloudlets. All Elijah-related source code is on GitHub. The links below give access to the various components.

OpenStack++

- Component overview:

https://github.com/cmusatyalab/elijah-cloudlet

- Dashboard extensions:

https://github.com/cmusatyalab/elijah-openstack

- Rapid VM provisioning:

https://github.com/cmusatyalab/elijah-provisioning

- Related publication: "Just-in-Time Provisioning for Cyber Foraging"

- Cloudlet discovery:

https://github.com/cmusatyalab/elijah-discovery-basic

Release note: "The first public release of cloudlet-discovery project"

- QEMU modifications for Elijah:

https://github.com/cmusatyalab/elijah-qemu

Applications that build on OpenStack++

- Gabriel:

https://github.com/cmusatyalab/gabriel

- Related publication: "Towards Wearable Cognitive Assistance"

- GigaSight:

https://github.com/cmusatyalab/GigaSight

- Related publication: "Scalable Crowd-Sourcing of Video from Mobile Devices"

- QuiltView:

https://github.com/cmusatyalab/quiltview

- Related publication: "QuiltView: a Crowd-Sourced Video Response System"

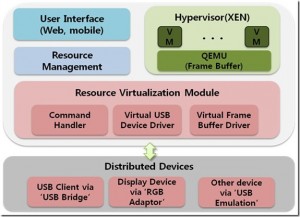

Virtual Machine Technology for System on-Demand Service

This project built a framework that provides an “on-demand computing environment” by virtualizing computing resources and peripherals. My contribution focused on designing and developing resource virtualization and protocols for transmitting I/O data over IP networks. I also implemented a virtual device management module that works with QEMU-DM, as well as the corresponding client software for iPhones and PCs.

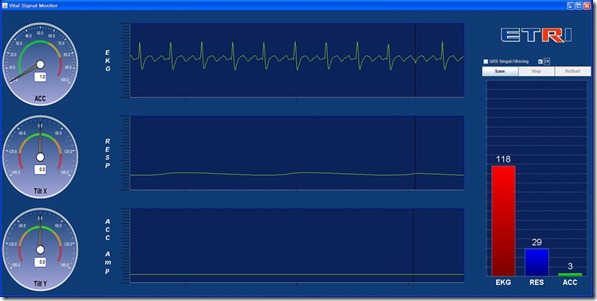

Context-Aware Platform for Healthcare

This project set out to create a context-aware system based on health information. Our team developed wearable healthcare monitoring devices in the form of a wristwatch, a chest band, and a necklace. Using vital signals such as ECG and PPG gathered from the wearable devices, I devised signal processing algorithms to noninvasively calculate heart rate and blood pressure. At the end of the project, the technology was successfully transferred to industry and is being prepared for a commercial offering.